How To Start A/B Testing on Shopify (The Easy Way)

Conversion rate is everything in ecommerce: it can mean the difference between costly stagnation and healthy revenue.

Some of your success relies on how well you acquire customers, refine ad audiences, and even keep up with industry trends.

Although it may be tempting to copy competitors or throw pasta at the wall to see what sticks, there are pitfalls to those methods.

Copying competitors means you may be following tactics that work for them but won’t work for you. It also rules out innovative discoveries by default, since you’re only ever doing what others have already done.

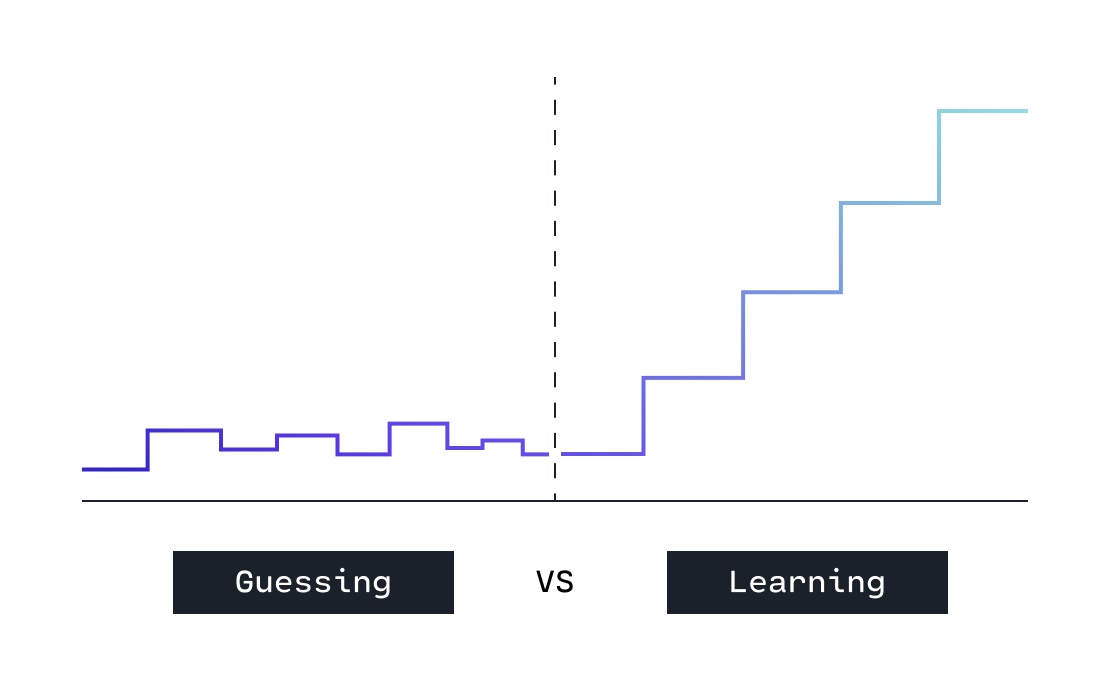

Throwing pasta at the wall to see what sticks means you waste your team’s time instead of learning and iterating.

It also means you’re always starting from the same baseline (guessing) instead of continuously improving based on what you’ve learned.

Instead, we encourage merchants to use A/B testing to find what works best for their stores. This method of improving conversions provides the best chances of turning shoppers into buyers.

By using A/B testing, you can find out what works for your specific site, SKUs, and shoppers, at every step of the buyer journey. This article will look at how to implement A/B testing for your Shopify store and what to do with your learnings.

We’ll cover:

- What is A/B testing?

- A/B testing vs multivariate testing

- Who should A/B test in Shopify?

- How to start A/B testing in Shopify

#cta-visual-pb#<cta-title>Start A/B testing on Shopify<cta-title>Create pages and run A/B tests on Shopify with Shogun–a natively integrated ecommerce optimization platform.Start testing today

What is A/B testing?

Before we dive into the how of A/B testing, let’s cover the basics of what A/B testing is, particularly in ecommerce.

A/B testing in ecommerce—also known as split testing—is a method of presenting website visitors with two versions of the same page.

As you A/B test, you monitor conversion data to see which version gets more visitors to take the action your call-to-action asks for.

This way, you can make data-driven decisions to improve your Shopify store’s performance and growth continuously. Without that data, you end up guessing and hoping for the best, which often takes longer to produce results and leads to more mistakes.

In a standard Shopify A/B test, 50% of visitors to a given page see version A while the other 50% see version B.

Version A should be the original version of a page, known as your “control.”

Version B is your “variant.” If the variant outperforms your control, then version B becomes your new control, and you create another variant to continue the process and improve results.
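If you’re curious how a tool might split traffic 50/50 behind the scenes, here’s a minimal Python sketch of one common approach: hashing a visitor ID so each shopper is randomly but consistently bucketed into A or B. The `assign_variant` function, the experiment name, and the visitor IDs are hypothetical, and this isn’t necessarily how any particular Shopify app does it.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str = "homepage-test") -> str:
    """Assign a visitor to "A" (control) or "B" (variant), 50/50.

    Hashing the visitor ID with the experiment name behaves like a random
    draw, but the same visitor always gets the same version on repeat visits.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # 0 to 99
    return "A" if bucket < 50 else "B"

# Hypothetical visitor IDs, such as values stored in a first-party cookie.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}")] += 1
print(counts)  # roughly 5,000 each
```

Using a hash instead of a fresh coin flip on every page view keeps returning shoppers from bouncing between versions, which would muddy your results.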

The ultimate goal of A/B testing is to derive greater value from the traffic you already have.

A/B testing has a few essential elements:

- A/B testing must be randomized, so no cherry-picking who sees what when they land on your site. Randomization minimizes the chances that other factors, like mobile versus desktop, will drive your results.

- You must run each test for a minimum amount of time to collect enough data to support a conclusion. Keeping the timeline uniform across every test also ensures factors like fewer active days don’t skew results.

- A/B testing is not a one-time thing. You must continuously test and refine your page elements to stay on top of trends. Consumer behavior changes over time, which means your successful call-to-action (CTA) or banner this month might tank the next.

Why A/B testing matters in ecommerce

An educated guess is still a guess, which means you aren’t basing your decisions on observed and quantified behavior.

A/B testing in Shopify is critical for discovering what drives action in your target audiences. Because each variant usually changes only one small element, you can pinpoint what had a positive or negative impact.

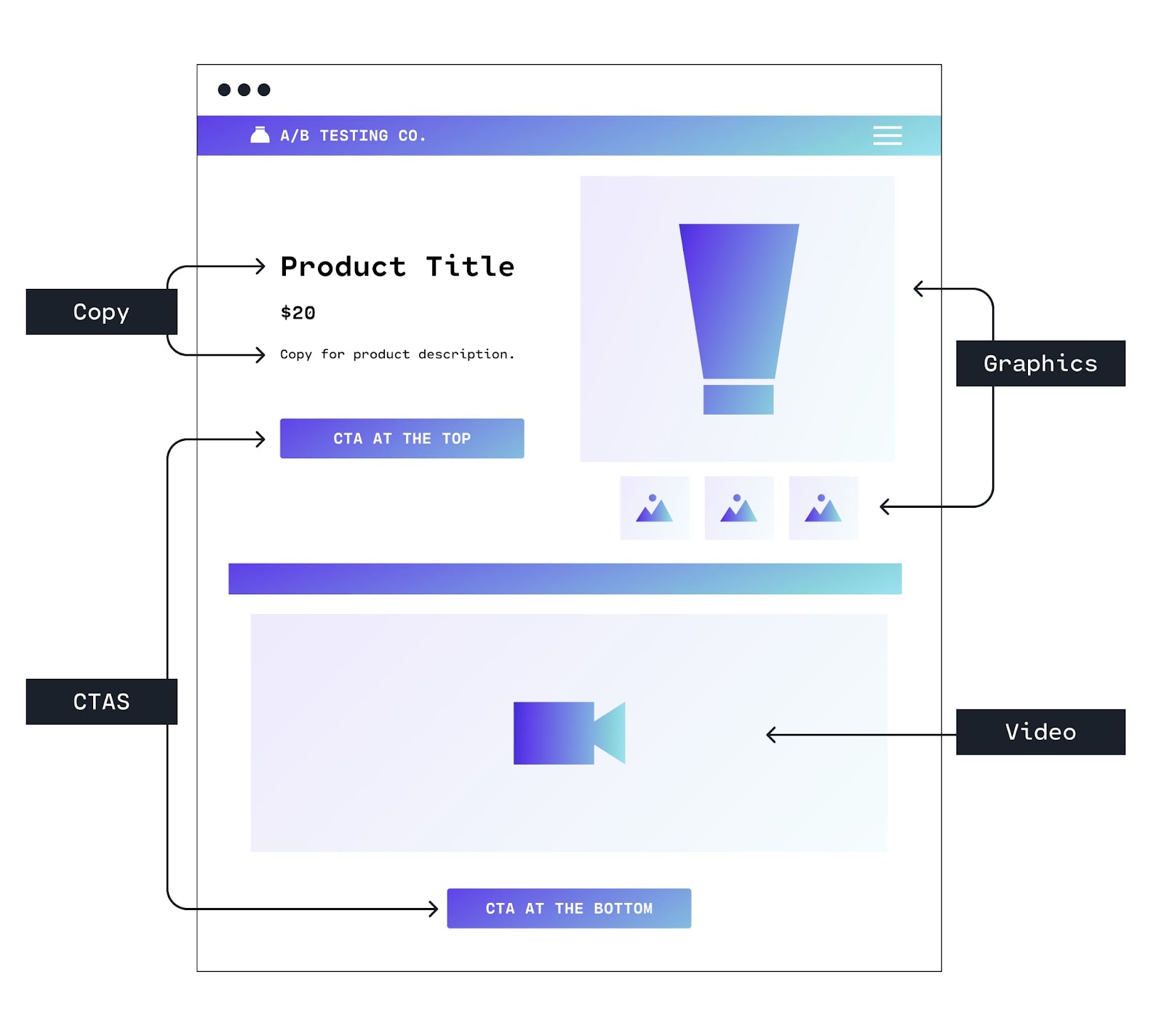

Through A/B testing, you can uncover changes to copy, images, CTA, or testimonial placement that will help you build better ecommerce landing pages.

Beyond improvements to specific product conversion and checkouts, you may uncover insights that help coax your shoppers through the buyer’s journey.

For example, uncovering specific copy that consistently leads to conversions could help you rephrase your value proposition and reduce bounce rates on key landing pages.

The main benefits of using Shopify split testing include:

- Improve content engagement

- Reduce bounce rates

- Boost conversion rates

- Reduce cart abandonment

- Increase subscriptions/opt-ins

Improve content engagement

You can increase conversions through optimization when you understand what content and contexts encourage your audience to take action.

You can track this engagement through metrics like email newsletter sign-ups, CTA click-throughs, items added to cart or a wishlist, link shares, and purchases.

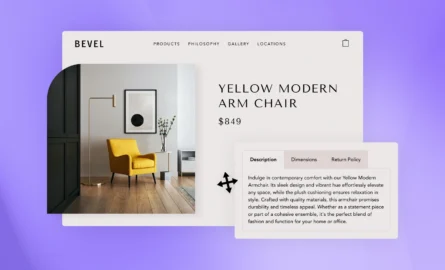

If you’re split testing to optimize your product page, for example, your variant may use a new image or a new product description with more colorful copy.

You then randomly split your traffic evenly between the two versions for a set period and compare their engagement rates.

The best-performing version can then be implemented.

Reduce bounce rates

Bounce rate is the percentage of visitors who leave your website after viewing only one page. One of the biggest red flags that your content is falling flat is a high bounce rate.

Luckily, Google Analytics makes this easy to measure.

A high bounce rate indicates your store page is unengaging or not what visitors expected. Meanwhile, a low rate suggests you’ve captured their attention, and they want to see what you have to offer.
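For a sense of what’s being measured, here’s a minimal sketch that computes bounce rate for each version from hypothetical session data, using the classic definition: sessions that viewed only one page. Google Analytics 4 defines bounce rate differently (the share of sessions that weren’t “engaged”), so your analytics numbers may not match this calculation.

```python
def bounce_rate(pages_per_session: list[int]) -> float:
    """Share of sessions that viewed exactly one page (classic definition)."""
    if not pages_per_session:
        raise ValueError("need at least one session")
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return bounces / len(pages_per_session)

# Hypothetical sessions for each version: number of pages viewed per session.
control_sessions = [1, 1, 3, 1, 2, 1, 1, 4, 1, 2]
variant_sessions = [1, 2, 3, 1, 2, 1, 5, 4, 1, 2]

print(f"Control bounce rate: {bounce_rate(control_sessions):.0%}")
print(f"Variant bounce rate: {bounce_rate(variant_sessions):.0%}")
```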

Using A/B testing, you can find the page versions that lower your bounce rate and deliver a more engaging experience for your shoppers, which may also support your search performance.

Boost conversion rates

Conversion rates can refer to both your marketing campaigns and your on-page conversions. Seeing a lift in either is key to growing your traffic, your sales, and your business.

A/B testing can help you find which campaign copy and images work best to get click-throughs to your website, and then which page elements work best to get visitors to make a purchase.

Look at boosting conversion rates in the following places on your website:

- Ad landing pages

- Homepage

- Product pages

- Checkout page

When you optimize your content through A/B testing to find what performs best, you enjoy more conversions and greater overall success.

Reduce cart abandonment

Almost 70% of shoppers abandon their carts before making a purchase.

A/B testing will help you discover and remedy the main reasons for cart abandonment, whether your CTA wasn’t prominent enough or the photos didn’t showcase your products’ value.

Reducing cart abandonment can help lower your customer acquisition costs, since more of the visitors you’ve already paid to attract go on to complete a purchase.

Increase subscriptions/opt-ins

Finally, A/B testing can help you gain more subscribers for your newsletter or other initiatives. This targets visitors who may become buyers in the future but aren’t ready yet.

By boosting subscribers, you grow the relationship and nurture these leads until they’re ready to convert.

Case study: How WallMonkeys increased conversion rates with ongoing A/B testing

WallMonkeys sells diverse wall decals. The company set out with a simple goal: to improve clicks and conversions on its homepage.

To improve traction from the homepage’s CTA, WallMonkeys ran an A/B test comparing the original stock photo with something more whimsical that was a better fit for the brand.

That small change increased conversion rates by 27%.

The next variant replaced the homepage slider with a prominent search bar, making conversions soar by 550%.

Keep in mind those kinds of results aren’t guaranteed. A/B testing is an experiment, so results can and will vary.

How much you gain will depend on sound statistical analysis and on what you do with the data you uncover.

Shopify A/B testing removes much of the guesswork of growth and improvement while also significantly reducing the time typically spent comparing the performance of changes to your store.

A/B testing vs multivariate testing

A/B testing and multivariate testing are closely related but critically different.

Multivariate testing tests several elements of a web page simultaneously and compares the updated result to a control version.

For example, you might change your header image, CTA text, and button color all at once, then test this updated version of your landing page against your previous one.

Chances are, you’ll see some differences in customer behavior between the two, but since all three changes were bundled into one version, you won’t know how much of the difference each change caused.
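A true multivariate test handles this differently from the bundled comparison above: it gives every combination of changes its own version so it can estimate each element’s effect, but that splits your traffic many ways. The sketch below counts the combinations for the three changes in this example; the option names are hypothetical.

```python
from itertools import product

# Hypothetical options for the three elements in the example above.
elements = {
    "header_image": ["current photo", "new photo"],
    "cta_text": ["Add to Cart", "Get it Now"],
    "button_color": ["red", "green"],
}

# A full-factorial multivariate test gives every combination its own version.
combinations = list(product(*elements.values()))
share = 1 / len(combinations)

print(f"{len(combinations)} versions, each getting {share:.1%} of traffic")
for combo in combinations:
    print(dict(zip(elements.keys(), combo)))
```

Three elements with two options each already mean eight versions, each getting only 12.5% of your traffic, which is why multivariate tests need far more visitors than a simple A/B test.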

A/B testing multiple on-page components

If you want to test multiple changes to your page, we recommend an ongoing A/B testing strategy that looks like this:

- Create an A/B test with your first change – for example, your header image. Run your test for a predetermined amount of time and track your results. Whatever version of your page performs best becomes your new control.

- Using your new control, create an A/B test with your next change – for example, a new CTA. Run your test for the same predetermined amount of time, tracking your results carefully. Again, whatever version performs best becomes your new control.

- Finally, using your latest control, create your next A/B test with your final variable – for example, your button color. Run your test for the same amount of time and track the results. Whichever version wins can become your new, updated page.

It’s possible one change drove positive results while another produced a negative outcome.

What’s important is you can quantify the impact of every change individually to understand what works for your ecommerce site, your customers, and your business overall.

#cta-visual-pb#<cta-title>A/B testing software for Shopify merchants<cta-title>Create pages and run A/B tests on Shopify with Shogun–a natively integrated ecommerce optimization platform.Start testing today

Who should A/B test in Shopify?

It’s important to note that A/B testing done incorrectly can actually hurt your website and business.

In some instances, A/B testing may not be a good fit for your store or may be a poor use of your time.

Here are some guidelines to understand whether or not you should run A/B testing.

Do you have a large enough sample size to get reliable results?

There’s a reason scientific studies need large samples to reach statistical significance: you need enough results to be confident your findings aren’t due to random chance.

If you only have a few monthly visitors to your site, it’ll be challenging to conduct testing and derive any statistically significant results.

For example, if you have only 20 visitors to your website in a given month, a single rogue visitor could skew your results significantly. Or, you could end up waiting half a year for enough visitors to generate data.
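To see why small samples are so unreliable, here’s a minimal sketch that computes a 95% Wilson score interval, a standard range of plausible values for a conversion rate. The counts are hypothetical: 1 sale from 20 visitors and 100 sales from 2,000 visitors both show a 5% conversion rate, but the small sample is consistent with anything from roughly 1% to 24%, while the larger one narrows it to roughly 4% to 6%.

```python
import math

def wilson_interval(conversions: int, visitors: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a conversion rate."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    p = conversions / visitors
    denom = 1 + z**2 / visitors
    center = (p + z**2 / (2 * visitors)) / denom
    margin = z * math.sqrt(p * (1 - p) / visitors + z**2 / (4 * visitors**2)) / denom
    return center - margin, center + margin

# Same 5% observed conversion rate, very different certainty.
for conversions, visitors in [(1, 20), (100, 2_000)]:
    low, high = wilson_interval(conversions, visitors)
    print(f"{conversions}/{visitors}: plausible true rate {low:.1%} to {high:.1%}")
```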

So, if you run a low-traffic site, A/B testing is probably not the best strategy for you.

Instead, talk directly to your customers through interviews and user testing. With smaller numbers, this personalized outreach is manageable and highly effective.

As traffic consistently grows, you can incorporate A/B testing for your highest-visited pages, like your homepage and checkout page.

Not sure how large a sample size you need for your tests?

Check out this calculator to help you understand the minimum sample size required to run your A/B test.

This tool allows you to input your current (baseline) conversion rate and a minimum detectable effect (MDE). The MDE is the smallest improvement in conversion rate you want the test to be able to reliably detect.

For example, with a baseline conversion rate of 3% and a 25% MDE, the calculator shows a minimum sample size of 8,408 visitors per variation. With a standard two-version A/B test, that product page would need at least 16,816 visitors in total (8,408 × 2).
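If you’d like to see the math behind calculators like this, here’s a minimal sketch of a common textbook sample-size formula for comparing two conversion rates. It assumes a two-sided test at 95% confidence and 80% power (typical defaults, but assumptions here), and the function name is hypothetical. With a 3% baseline and a 25% relative MDE, it returns about 9,100 visitors per variation, somewhat more than the calculator’s 8,408, likely because calculators use different formulas and default settings. Treat either number as a rough minimum.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH version for a two-sided two-proportion test.

    Uses a common normal-approximation formula; online calculators may use
    different formulas and return somewhat different numbers.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)   # e.g. 3% * 1.25 = 3.75%
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)

n = sample_size_per_variant(baseline=0.03, relative_mde=0.25)
print(f"{n} visitors per variation, {2 * n} in total")
```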

Have your conversion rates suddenly dropped?

It’s every ecommerce professional’s worst nightmare—a sudden drop in conversion rates that can’t be attributed to the standard peaks and valleys of seasonality.

If you recently changed your website, landing page, pricing, or discounts, it’s possible the change isn’t working.

Begin by looking at any alterations you’ve made recently and reverse them. If you see your conversion rates return to normal, you’ll immediately know your change was the culprit.

This gets tricky, though, if you made multiple changes. In this case, you can begin A/B testing to see which one caused the problem.

Remember: Test one change at a time so you can be sure whether it improves or lowers conversion rates.

Are you investing more in customer acquisition?

If you’re doubling down on your customer acquisition strategy and spending more on ads, A/B testing can help your money go further.

Since your investment aims to increase the number of visitors you get, you should leverage A/B testing to ensure those visitors take the actions you want.

Ongoing testing helps you optimize your ads, increase click-through rates (CTRs), and close more sales.

To A/B test during customer acquisition, you can try a few things:

- A/B test your ads, presenting different CTAs or graphics, and send all traffic to the same landing page.

- Use one ad and send 50% of traffic to a version A landing page and 50% to a version B landing page.

You can even A/B test different elements based on acquisition channels. If you’re investing more in social media ads, consider testing shortened landing page copy and a faster path to purchase.

If you’re investing more in your SEO, identify the pages organic search visitors go to most and A/B test those.

For example, if most blog visitors go to the FAQs page next, test different featured questions at the top of the page.

Why timing is important with Shopify A/B tests

In the words of Buck Brannaman, “Timing is everything,” and A/B testing is no exception.

The length of time you run your Shopify A/B tests will vary, but ideally, you should run each test for at least two full business cycles.

This is important because…

1. Customer shopping habits can change based on the day of the week

According to SalesCycle, the most popular days for online shopping were Monday and Thursday in 2019 and Wednesday and Thursday in 2020, with peak hours between 8 p.m. and 9 p.m. and a significant drop-off after 10 p.m.

In short, you can’t run a test for just a day or two.

Because shopping habits vary from day to day, running your test for a longer, predetermined period gives you more customer insight and ensures one-day peaks or valleys won’t distort your results.

2. Certain customer segments may only shop on specific days

Not all ecommerce shoppers are regulars.

Some may only shop at certain times of the year or on certain days of the month, depending on the type of products they’re purchasing or where their payday falls.

These anomalies can skew testing results significantly.

3. External factors like weather, seasons, events, and holidays can significantly impact sales

Cyber Monday is the biggest online shopping day of the year, with November, December, and January being the busiest ecommerce months.

Consumers prepare for the holidays from Cyber Week through Christmas and then deal-hunt throughout January for blow-out sales.

4. Customers may window shop and return later

Traffic alone can’t be your only source of data, because many customers comparison shop before they buy.

In fact, customers will visit three ecommerce stores on average before making a purchase.

5. You can account for various campaigns and anomalies like your newsletter dropping

You’ll naturally see spikes in traffic and sales when you send your regular newsletter or launch special campaigns.

These sudden spikes occur at very specific times, and if your testing doesn’t capture them, you could end up with inaccurate results that leave you worse off than when you started.

Decide on your sample size before you start, and run each test until you reach it rather than stopping as soon as you hit a certain number of conversions or one version pulls ahead. This way, you’ll have enough data to tell whether your changes made a statistically significant difference.
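Once you’ve reached your planned sample size, one common way to check significance is a two-proportion z-test. Here’s a minimal sketch with hypothetical conversion counts; the function name and the 5% threshold are assumptions, and the p-value is only meaningful if you check it once at the planned sample size rather than repeatedly while the test runs.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare control A and variant B; return (relative lift, two-sided p-value)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # combined rate if there's no real difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test: both versions have 0% or 100% conversion")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    lift = (rate_b - rate_a) / rate_a
    return lift, p_value

# Hypothetical results once each version reached its planned 9,100 visitors.
lift, p = two_proportion_z_test(conv_a=273, n_a=9_100, conv_b=341, n_b=9_100)
print(f"Lift: {lift:.1%}, p-value: {p:.4f}")
print("Significant at the 5% level" if p < 0.05 else "Not significant; keep the control")
```

In this example, the variant converts at about 3.7% versus 3.0% for the control, a lift of roughly 25%, and the p-value comes out well below 0.05.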

If you stop too soon, let the test run too long, or schedule it during something like a major holiday shopping season, the validity of your test goes out the window.

While you can’t entirely eliminate these influential external factors, you can minimize their impact by running tests for full-week periods through multiple business cycles.
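To turn a required sample size into a run time, you can round up to whole weeks. Here’s a minimal sketch, assuming one business cycle equals one week, a two-week minimum, and that all of the page’s daily visitors enter the test; the traffic figures and the function name are hypothetical.

```python
import math

def estimate_run_days(visitors_per_variant: int, daily_visitors: int,
                      variants: int = 2, min_weeks: int = 2) -> int:
    """Days to run a test, rounded up to whole weeks with a minimum length.

    Assumes one business cycle is one week and that every visitor to the
    page is split evenly across the versions.
    """
    total_needed = visitors_per_variant * variants
    days = math.ceil(total_needed / daily_visitors)
    weeks = max(min_weeks, math.ceil(days / 7))
    return weeks * 7

# Hypothetical page needing 9,100 visitors per version.
print(estimate_run_days(9_100, daily_visitors=1_500))  # 14
print(estimate_run_days(9_100, daily_visitors=500))    # 42
```

A page with 1,500 visitors a day needs about 13 days to collect 18,200 visitors, which rounds up to two full weeks; at 500 visitors a day, the same test would need six weeks.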

What to A/B test in Shopify

The beauty of A/B testing is that you can test many things in Shopify to optimize your landing page, increase conversions, and realize growth.

Copy

Copy is all the text on your website. Beyond the actual text and what it says, consider testing the following:

- Font: Is the font legible and easy to read? Does it stand out on the page? Should it be made larger or smaller? Should you change the color?

- Length: Are text blocks too bulky or too small? Will shorter, snappier copy increase conversions?

- Location: Where does copy currently sit on your site? What percentage is above the fold versus below?

- Verbiage: Is the copy easy to understand for your expected target audience? Is it too technical or not technical enough?

Colors and theme

Color psychology can affect your Shopify store’s look and feel.

According to KISSmetrics, a whopping 85% of customers say color is a primary reason for buying a product.

With this in mind, making changes to your store’s color scheme or theme can have a huge impact on your conversions.

You can play with color in several ways, from buttons to fonts, overlays on visuals, and even your store background. Be sure to make one change at a time to accurately track what works best.

Graphics

Changing your graphics can be a billion-dollar win—just ask Aerie.

Sales skyrocketed when the retailer removed all altered photos from its advertising and website. Although the average Shopify store won’t have the same results as Aerie, updating your graphics and images is still worth testing.

Outdated graphics, for example, could deter potential buyers, while fresh, new visuals can reduce your bounce rate and increase conversions.

Structure or layout

The structure of your Shopify store’s landing and product pages can make or break the customer experience.

Changing the page structure is one of the bigger changes you might decide to test, so it’s extra important to keep all of the content itself exactly the same.

When it comes to testing the structure or layout of your Shopify store, you could try:

- Making graphics more prominent and moving copy below the fold

- Adding more product photos/graphics by using a grid layout

- Altering where buttons are located

#cta-paragraph-pb#Use Shogun to create unique page layouts and run A/B tests with variants assigned a percentage of the traffic.

Video and interactive elements

When it comes to content, especially ecommerce product content, video wins conversions.

A product video helps your potential buyer see the item in use and allows them to listen and learn instead of just reading about it.

Adding a video above the fold on your Shopify store can increase engagement, boost conversions, and reduce your cart abandonment rate while simultaneously expanding your brand awareness.

Consider replacing a static graphic or text box with video in your A/B testing and see which performs better.

CTA

Look at your CTA text, location, color, button style, and interactive elements.

Based on what you want customers to do, should the CTA be “Add to Cart” or “Get it Now”? Maybe you need to build urgency into your CTA, for example, when a sale ad sends shoppers to your Shopify landing page.

Or, should you test out different colors for your CTA? If you use red because it’s your brand color, could people be associating your CTA with a stop sign or danger?

Perhaps your CTA doesn’t look like a buy button and gets lost on the page. In that case, you could move it to a spot with more white space where it stands out.

Maybe when someone hovers over your CTA, their cursor doesn’t change to show they can click on it. In that case, you can test out interactive elements, like adding a hover effect on the button to indicate it’s clickable.

CTAs are incredibly versatile and have many elements to test, but it’s critical that you change only one element per test.

Remember: Test one thing at a time

To A/B test properly, you must go slowly to achieve fast growth. Even the experts take care to test a single variable at a time to get reliable results.

For example, if you change both your CTA and your store’s page structure in the same test, you won’t know which change is causing the differences you see in customer behavior.

A/B testing is best done with just A and B, not the whole alphabet. Hence the name.

How to start A/B testing in Shopify

As with so many things in business, sometimes understanding how to get started can be the hardest part.

To begin testing in Shopify, you need to clearly define your question, the goal you’d like to achieve, and what you’ll consider “success” to be.

First, look at your ecommerce page analytics.

For the sake of this example, let’s pretend your monthly traffic is 100,000 users and you have a bounce rate of 70%. This means 70,000 people leave your landing page without completing another action.

You might think: “If I change my header image from a black-and-white stock photo to a colorful picture of my products, I can reduce my bounce rate from 70% to 50%.”

Now you have a baseline, a hypothesis, and a target.
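It can help to write the plan down before you build anything. Here’s a minimal sketch that records the baseline, hypothesis, and target from this example; the `ExperimentPlan` class is hypothetical and not part of Shopify or any testing tool.

```python
from dataclasses import dataclass

@dataclass
class ExperimentPlan:
    """Hypothetical record of a test's hypothesis, baseline, and target."""
    hypothesis: str
    monthly_visitors: int
    baseline_bounce_rate: float
    target_bounce_rate: float

    def baseline_bounces(self) -> int:
        return round(self.monthly_visitors * self.baseline_bounce_rate)

    def target_bounces(self) -> int:
        return round(self.monthly_visitors * self.target_bounce_rate)

    def relative_change(self) -> float:
        """Target expressed as a change relative to the baseline."""
        return (self.target_bounce_rate - self.baseline_bounce_rate) / self.baseline_bounce_rate

plan = ExperimentPlan(
    hypothesis="A colorful product photo in the header will lower bounce rate",
    monthly_visitors=100_000,
    baseline_bounce_rate=0.70,
    target_bounce_rate=0.50,
)
print(plan.baseline_bounces(), plan.target_bounces(), f"{plan.relative_change():.1%}")
```

Going from 70% to 50% means dropping from 70,000 to 50,000 bounces a month, about a 28.6% relative reduction, which is the kind of figure you’d enter as the minimum detectable effect in a sample-size calculator.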

Special note: A/B testing at checkout

Standard Shopify users cannot modify the checkout funnel and instead use the checkout.shopify.com domain.

Because there’s cross-domain traffic during checkout, cookies are necessary for Shopify A/B testing tools to keep track of your users.

Unfortunately, some users block cookies, and some browsers, like Safari, block third-party cookies by default.

If cookies are blocked, you automatically exclude a portion of users from your split testing. That can skew your results significantly if you’re factoring conversions and revenue into your tests.

For Shopify Plus users, it’s markedly easier.

Joshua Uebergang, author of Shopify Conversion Rate Optimization, provides an easy option for those familiar with coding:

“It is technically simple to run split-tests of visual elements on Shopify Plus stores. If you want to test ‘Add To Bag’ text on the button of your product page against ‘Add To Cart,’ you create a jQuery that selects a unique CSS identifier inside your favorite split-testing tool.”

Power your Shopify A/B tests with Shogun

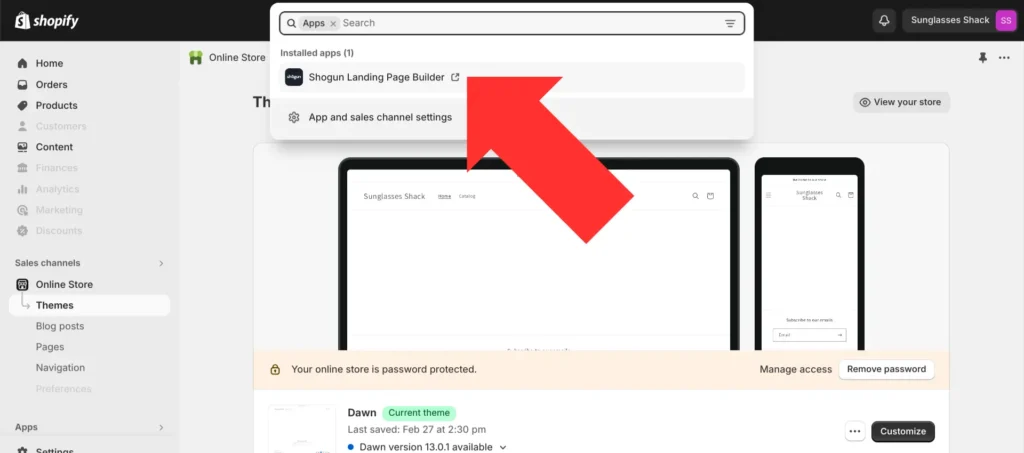

Shogun allows you to create, evaluate, and complete A/B tests without writing a single line of code.

With Shogun, there is no need for a URL redirect to run a test and no “flash” of content when the variant page loads. Your shoppers will have a crisp user experience–even when you’re running many variants.

Another benefit to using Shogun is the visual editor, which allows marketing teams to quickly spin up new test pages and as many variants as needed.

The editor contains a grid-based design element that allows for super flexible page layouts. Simply arrange content on the page visually to create the perfect on-brand assets.

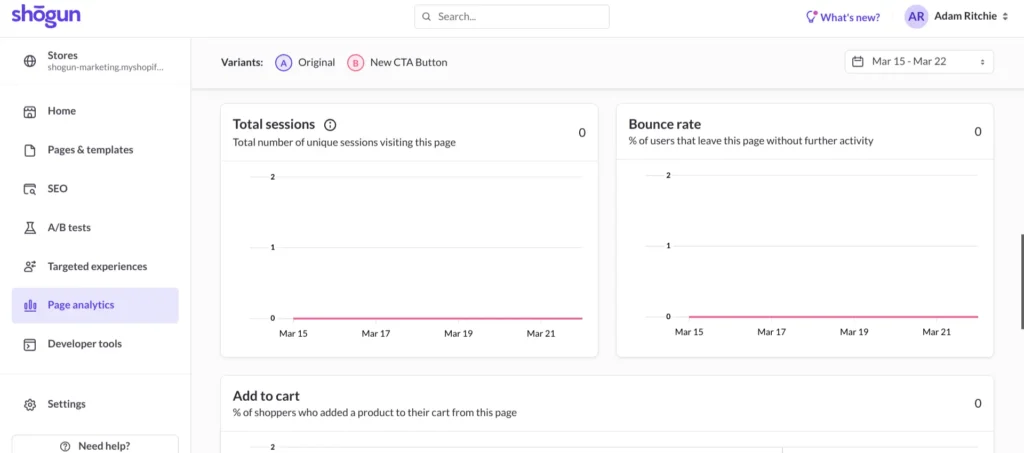

It’s easy to measure A/B test performance as well. With Shogun’s built-in analytics capabilities, you can view metrics such as bounce rate, conversion rate, top clickthrough destinations, and more for each of your variants whenever you want.

Yet another benefit to using Shogun is that you can manually adjust the amount of traffic that each page variant receives. This allows you to control for risk, as there’s always a chance that the changes you make to a page will end up having a negative effect.

By directing more traffic to the original version of a page and less to the new variants, you can ensure that fewer visitors will be impacted if your test goes wrong.
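Shogun handles traffic allocation for you. Purely to illustrate the idea, here’s a minimal sketch of how uneven weights (say, 80% to the original and 20% to a variant) could be applied so each visitor consistently sees the same version. The function, weights, and visitor IDs are hypothetical, and this is not Shogun’s implementation.

```python
import hashlib

def assign_weighted(visitor_id: str, weights: dict[str, float],
                    experiment: str = "homepage-test") -> str:
    """Map a visitor to a page version according to traffic weights.

    `weights` maps version names to fractions that sum to 1, for example
    {"original": 0.8, "variant": 0.2}. The same visitor ID always maps
    to the same version for a given experiment.
    """
    if not weights or abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must be non-empty and sum to 1")
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    point = int(digest, 16) / 16 ** len(digest)  # roughly uniform in [0, 1)
    cumulative = 0.0
    name = ""
    for name, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return name
    return name  # only reached through floating-point rounding at the top end

# Hypothetical 80/20 split that limits how many shoppers see the untested variant.
weights = {"original": 0.8, "variant": 0.2}
counts = {version: 0 for version in weights}
for i in range(10_000):
    counts[assign_weighted(f"visitor-{i}", weights)] += 1
print(counts)  # roughly 8,000 original / 2,000 variant
```

Keep the trade-off in mind: the smaller a variant’s share of traffic, the longer it takes that variant to collect enough visitors for a reliable result.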

To demonstrate just how easy it is to use Shogun for A/B testing, let’s walk through a hypothetical homepage test: trying out a new CTA button to see if it lowers your bounce rate.

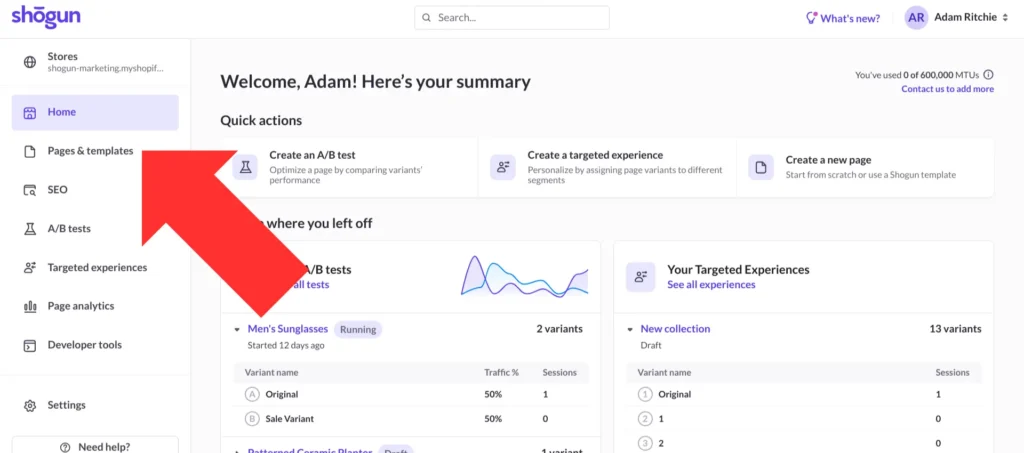

Step 1. Go to the “Apps” section of the main Shopify dashboard and open the Shogun app.

Step 2. Select “Pages & templates”.

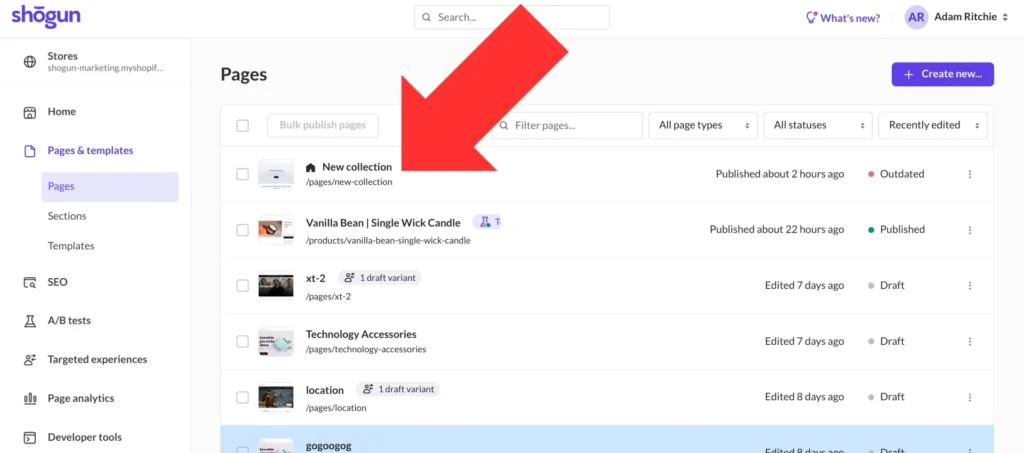

Step 3. Select your homepage. Note that A/B tests can only be run on Shogun-created pages, so you cannot use a non-Shogun page as your “original” page.

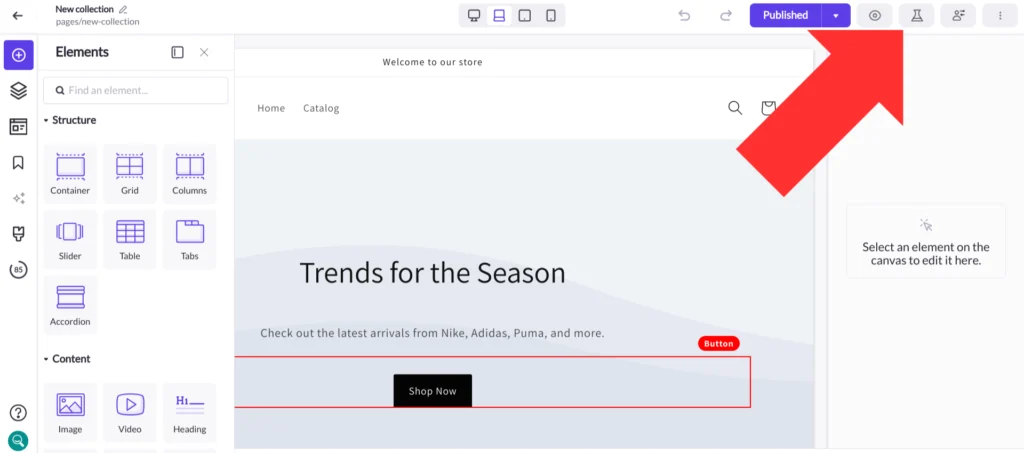

Step 4. Select the lab beaker icon that’s now in the top-right corner of your screen to open the A/B testing menu.

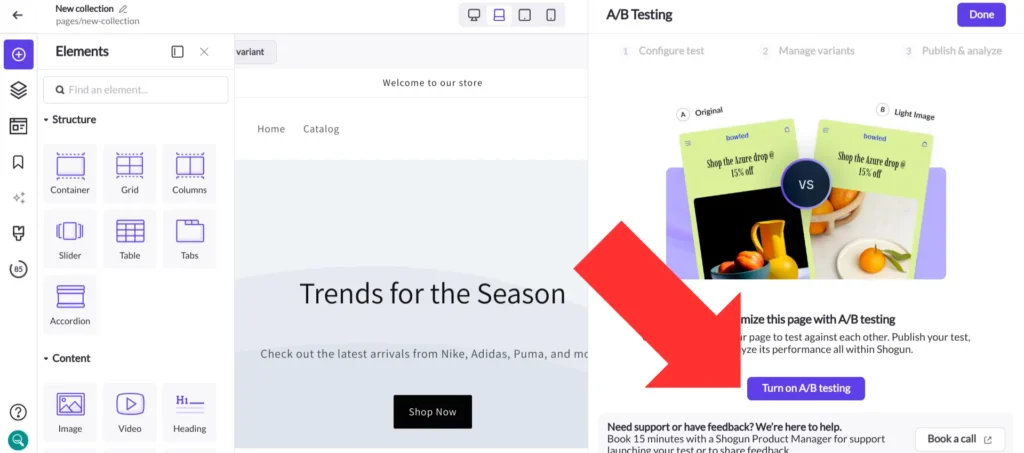

Step 5. Select “Turn on A/B testing”.

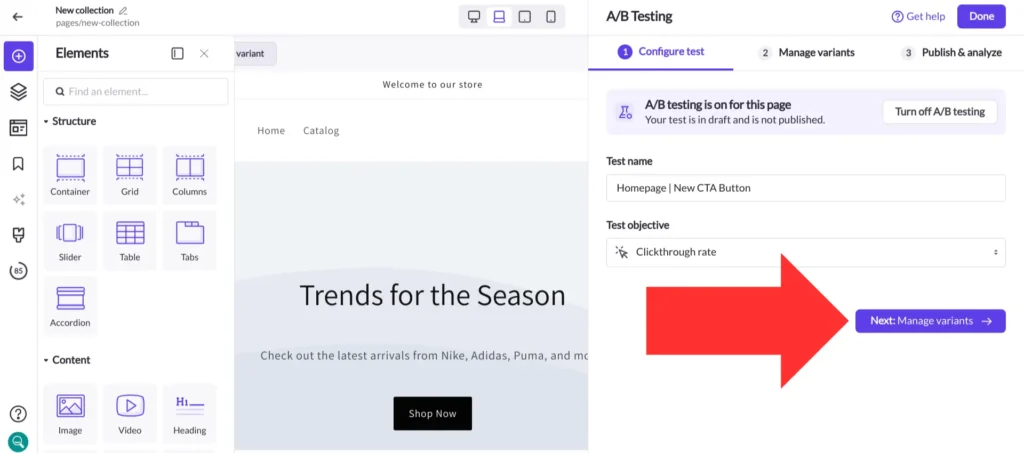

Step 6. Choose a name and objective for the test, then select “Next: Manage variants”.

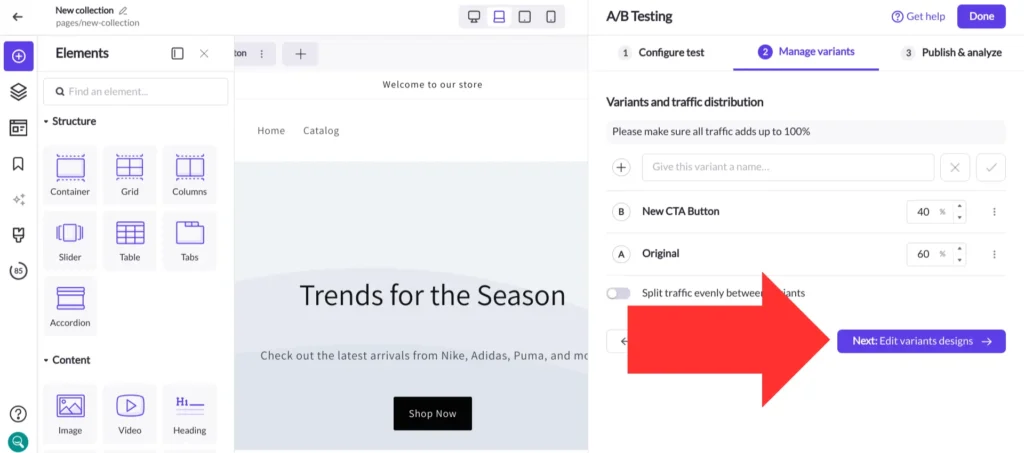

Step 7. Enter a name for your new page variant. At this stage, you can also set how much traffic goes to each variant. While we only need one variant for this A/B test, this is also where you would add more variants if you wanted to test several versions at once (often called an A/B/n test).

Whenever you’re ready, select “Next: Edit variants designs”.

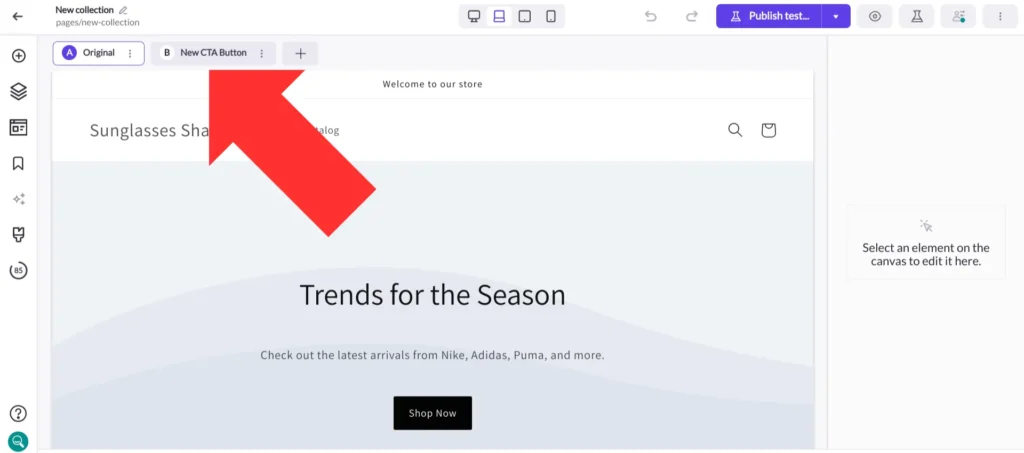

Step 8. You can use the tabs near the top-left corner of the screen to toggle between which variant of the page you’re editing.

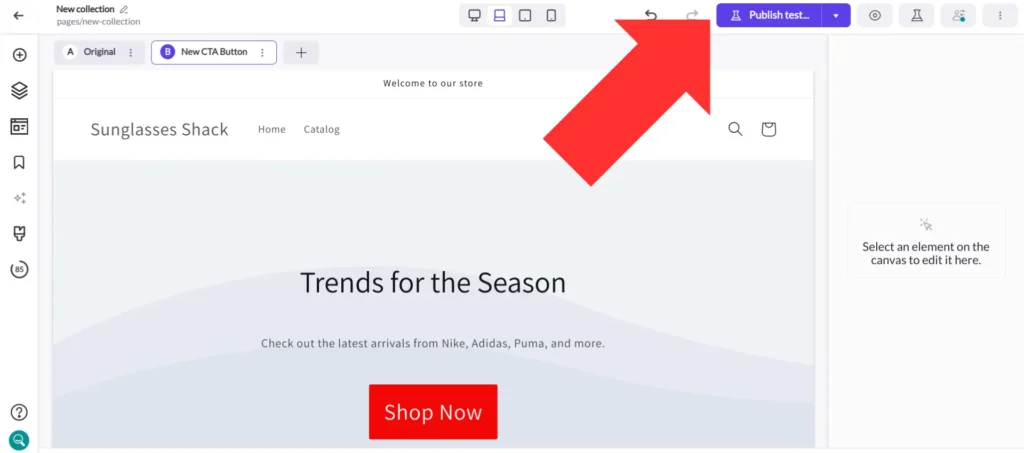

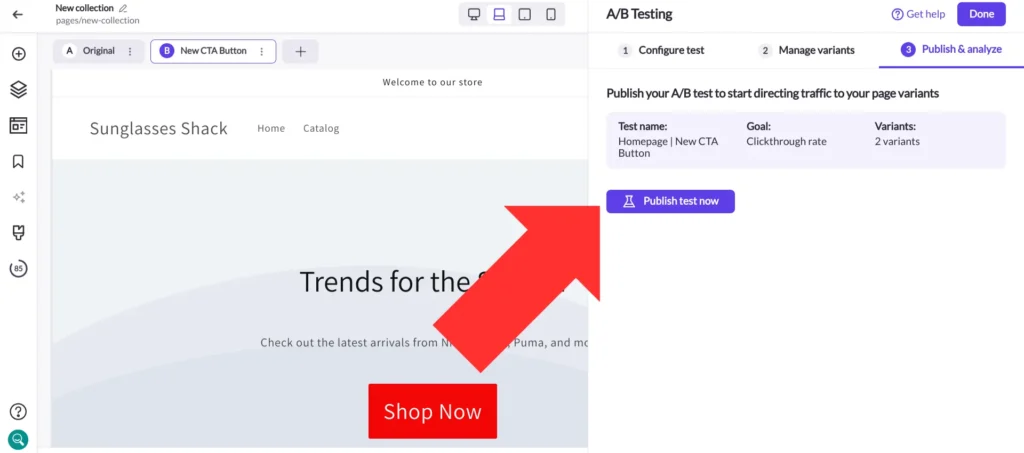

Step 9. Once you’ve finished editing your variants, save your changes and select “Publish test…”.

Step 10. After reviewing the details of your test, select “Publish test now”.

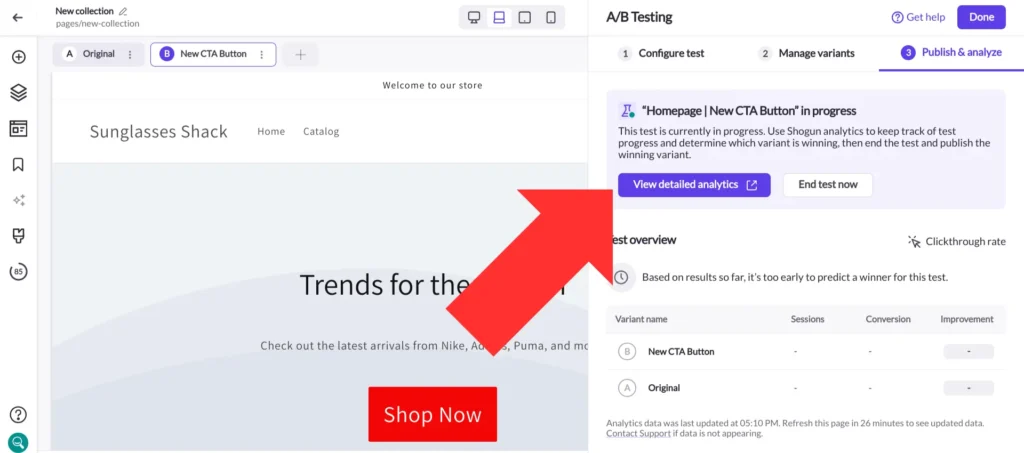

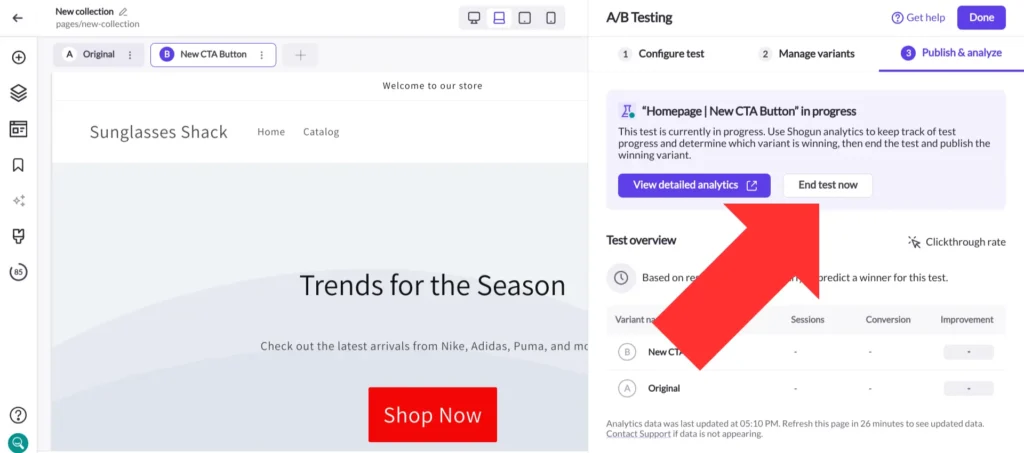

Step 11. You’ll be presented with a test overview that allows you to track how your different variants are performing at a glance. For more information, select “View detailed analytics”.

This is where you can find the bounce rate, as well as many other metrics.

Select “End test now” whenever you’re ready to finish your A/B test.

Step 12. Pick the winner of your test, then select “End test and publish”. All page traffic will now be directed to the winning variant. The other variants will still be saved in case you want to use them later, though. Also, you have the option of turning variants into templates that can be reused across your site.

That’s it! Pretty simple, right?

You can get started running A/B tests with Shogun by installing the app and starting a free trial on any plan.

Click here to explore A/B testing with Shogun


Rachel Go

Rachel is a remote marketing manager with a background in building scalable content engines. She creates content that wins customers for B2B ecommerce companies like MyFBAPrep, Shogun, and more. In the past, she has scaled organic acquisition efforts for companies like Deliverr and Skubana.